16 Days: AI and Technology, not just for the rich

For years, we’ve been sold a dangerous myth: that AI and technology belong to the rich, the educated, the elite. That only a small, privileged percentage of society gets to shape the future.

But that narrative is not just misleading, it’s harmful. And it’s holding us back.

Whenever conversations about AI, digital tools, or emerging tech arise, there’s a familiar pushback: “But some communities don’t even have electricity.” “People in rural areas won’t understand this.” “Technology just causes more harm.” These arguments are used to maintain digital inequalities, not to find ways to improve access.

The real question is: Why are we refusing to prepare young people for the digital age they’re inheriting? Why aren’t schools prioritising digital literacy, digital citizenship, and online safety? If learners aren’t taught how to navigate the internet, how to avoid scams, how to understand the digital world, or how AI shapes their future, how will they survive the world we are already living in? Pretending technology isn’t here doesn’t protect people. Education does. Access does. Inclusion does.

At GRIT, we’ve seen firsthand how technology can empower, not exclude. Through co-creation workshops with youth, sex workers, Deaf communities, and survivors of gender-based violence, we’ve shown that tech doesn’t need to come from “white men in fancy offices.” It can come from the people who stand to benefit from it, shaping tools that reflect their lived experiences.

This is where GRIT proves that technology can be for everyone. The GRIT App is a free, accessible, survivor-centered safety tool built with communities, not imposed on them. It exists because survivors, youth, sex workers, Deaf communities, and grassroots leaders told us what they needed most, and we listened.

The app’s Panic Button gives users access to free emergency response. Its encrypted Evidence Vault lets users store videos, voice notes, screenshots, photos, and documents safely for future legal cases or protection orders. This feature was created because community members said, “I don’t know where to store my evidence safely.” The Support Services Directory offers information on shelters, clinics, legal organizations, psychosocial support, LGBTQIA+-friendly services, and other emergency contacts, all written in plain, simple language, because safety information should never be complicated and should be available to everyone.

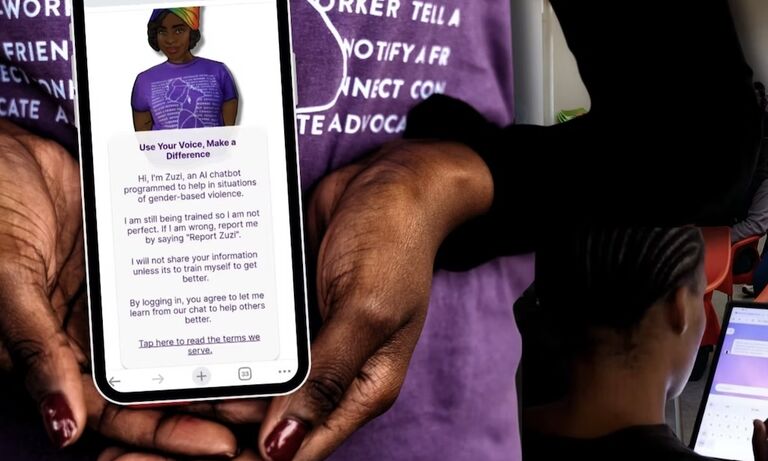

And then there is Zuzi, a first-of-its-kind, community-created AI chatbot addressing gender-based violence. Available 24/7, the bot gives guidance, emotional support, practical steps for safety, information about rights, and referrals to real human services. Zuzi listens without judgment and speaks in South African languages, so that people can access help in a language they understand and can easily use. Most importantly, the bot was co-created through a deep, participatory process in which communities shaped the chatbot’s personality, content, and responses.

GRIT’s co-creation model is at the heart of everything we build. We don’t sit in offices imagining what people need; we go into communities. We sit in youth hubs, Deaf schools, township halls, and safe spaces where survivors gather. We work side-by-side with community leaders, the people who live the realities we aim to address. We test features, gather feedback, rebuild, and return again. This is how trust is built. This is how relevant tools emerge. Through this process, new features like the Report Services tool were created for individuals who needed a safe place to document mistreatment by clinics and support centers. It is technology shaped by lived experience. These innovations were not born from Silicon Valley. They were born from townships, community halls, marginalized groups, and lived realities. GRIT is living proof that technology belongs to everyone, especially the people often left out of the tech conversation.

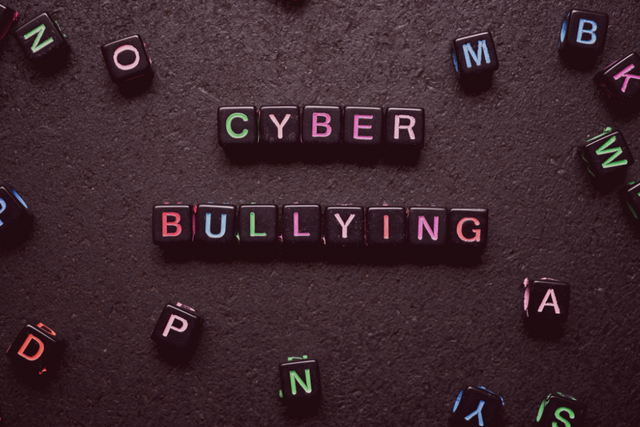

As the digital world evolves, we are now talking about TFGBV (Tech-Facilitated Gender-Based Violence) more often. But here’s the truth: policymakers and lawmakers are still grappling with how best to respond, and with whether existing laws are adequate to deal with it. We have conversations, yes. But conversations are not protection. And even when safety policies exist, they’re written in such complicated English that even adults struggle to understand them, never mind children who are already online. If we want communities to be safe, then we need:

- Simplified safety guidelines

- Tech companies held accountable

- Policies co-created with the people affected

Not top-down rules that ignore real life.

There is a popular belief that AI is “designed to be biased.” Bias exists, yes, because humans built the systems. But instead of accepting that as the final truth, why aren’t we challenging it?

Well… I am. GRIT is. We are questioning how these systems are trained. We are creating tools that represent South African realities. We are including voices that are usually silenced. But here’s the thing: To challenge a system, you need a seat at the table. So let me ask: Will you give us the platform? The biggest obstacle we face is the loud chorus of people saying, “AI is dangerous,” “AI is stealing jobs,” “AI is ruining everything.”

Fear has become so loud that nobody wants to consider the possibility that AI could also protect, educate, and empower. And that silence is dangerous because when marginalized communities are scared away from adopting new tools, they’re left behind again.

AI can absolutely cause harm. But AI can also:

- help survivors access justice

- translate critical information instantly

- open economic opportunities

- democratize education

Technology is not the enemy. Exclusion is. Lack of education is. Silencing community voices is. If we don’t teach digital literacy now, we are setting the next generation up for failure. If we don’t talk about AI openly, communities will enter the digital age unprepared, misinformed, and unprotected. If we don’t include marginalized voices in tech design, AI will simply replicate the same inequalities we’re fighting against in real life.

The truth is simple: AI and technology are not reserved for the rich. They are designed for the rich. It is our responsibility to build tech for everyone, including people like us in South Africa. Let’s not wait for permission. Let’s take our seat at the table. Let’s build tech that includes, protects, and empowers.

Because the future isn’t coming, it’s already here.

And we deserve to be part of it.
